Top 25 Quotes on DeepSeek

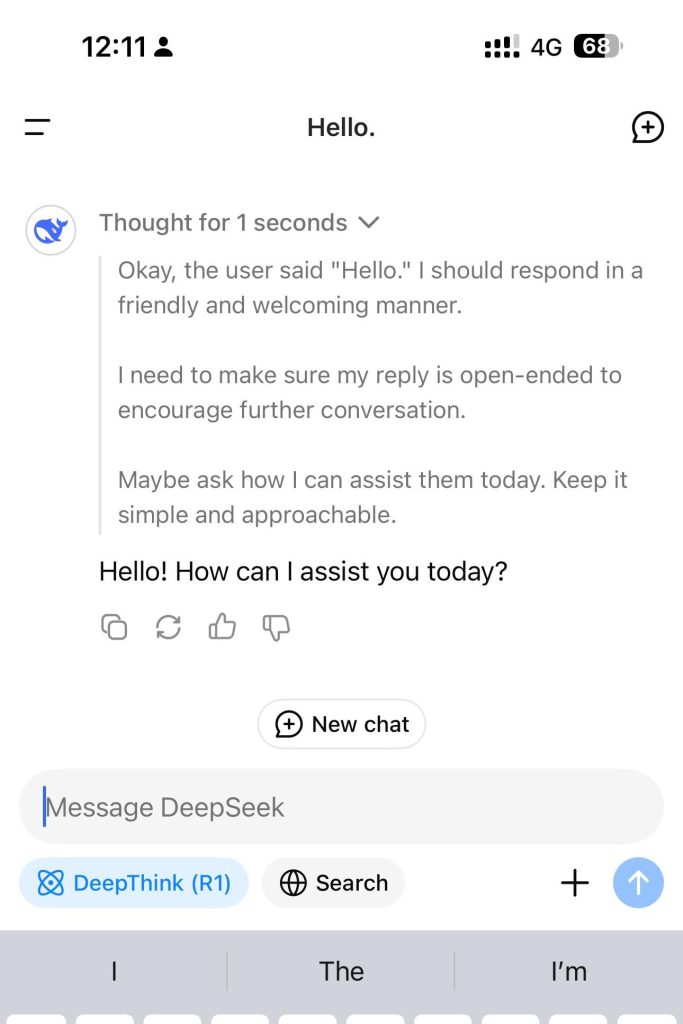

What makes DeepSeek R1 a game-changer? We update our DEEPSEEK to USD price in real time. × price. The corresponding fees will be deducted directly from your topped-up balance or granted balance, with a preference for using the granted balance first when both balances are available. And perhaps more OpenAI founders will pop up. "Lean’s comprehensive Mathlib library covers diverse areas such as analysis, algebra, geometry, topology, combinatorics, and probability statistics, enabling us to achieve breakthroughs in a more general paradigm," Xin said. AlphaGeometry also uses a geometry-specific language, while DeepSeek-Prover leverages Lean’s comprehensive library, which covers diverse areas of mathematics. On the more challenging FIMO benchmark, DeepSeek-Prover solved 4 out of 148 problems with 100 samples, while GPT-4 solved none. Why this matters - brainlike infrastructure: While analogies to the brain are often misleading or tortured, there is a useful one to make here - the kind of design idea Microsoft is proposing makes big AI clusters look more like your brain by essentially reducing the amount of compute on a per-node basis and significantly increasing the bandwidth available per node ("bandwidth-to-compute can increase to 2X of H100"). If you look at Greg Brockman on Twitter - he’s just like a hardcore engineer - he’s not somebody who is just saying buzzwords and whatnot, and that attracts that kind of people.
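The balance rule quoted above (fees come out of the granted balance first, then the topped-up balance) can be sketched in a few lines. This is a minimal illustration only: the function name `deduct_fee` and the insufficient-balance error are hypothetical and not part of any DeepSeek API.

```python
from decimal import Decimal


def deduct_fee(fee: Decimal, granted: Decimal, topped_up: Decimal) -> tuple[Decimal, Decimal]:
    """Return the (granted, topped_up) balances left after charging `fee`.

    Hypothetical helper: the granted balance is used first, and only the
    remainder is taken from the topped-up balance.
    """
    if fee < 0:
        raise ValueError("fee must be non-negative")
    if fee > granted + topped_up:
        raise ValueError("insufficient balance")

    from_granted = min(fee, granted)      # spend granted balance first
    from_topped_up = fee - from_granted   # remainder comes from topped-up balance
    return granted - from_granted, topped_up - from_topped_up


if __name__ == "__main__":
    print(deduct_fee(Decimal("3.00"), Decimal("2.00"), Decimal("5.00")))
```

Using `Decimal` rather than `float` avoids rounding drift when amounts are monetary.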

"We believe formal theorem proving languages like Lean, which offer rigorous verification, represent the future of mathematics," Xin said, pointing to the growing trend in the mathematical community to use theorem provers to verify complex proofs. "Despite their apparent simplicity, these problems often involve complex solution techniques, making them excellent candidates for constructing proof data to improve theorem-proving capabilities in Large Language Models (LLMs)," the researchers write. Instruction-following evaluation for large language models. Noteworthy benchmarks such as MMLU, CMMLU, and C-Eval showcase exceptional results, demonstrating DeepSeek LLM’s adaptability to diverse evaluation methodologies. The reproducible code for the following evaluation results can be found in the Evaluation directory. These GPTQ models are known to work in the following inference servers/webuis. I assume that most people who still use the latter are newbies following tutorials that have not been updated yet, or possibly even ChatGPT outputting responses with create-react-app instead of Vite. If you don’t believe me, just take a read of some experiences people have playing the game: "By the time I finish exploring the level to my satisfaction, I’m level 3. I have two food rations, a pancake, and a newt corpse in my backpack for food, and I’ve found three more potions of various colors, all of them still unidentified."

Remember to set RoPE scaling to 4 for correct output; more discussion can be found in this PR. Could you get more benefit from a larger 7B model, or does it slide down too much? Note that the GPTQ calibration dataset is not the same as the dataset used to train the model - please refer to the original model repo for details of the training dataset(s). Jordan Schneider: Let’s start off by talking through the ingredients that are necessary to train a frontier model. DPO: They further train the model using the Direct Preference Optimization (DPO) algorithm. As such, there already appears to be a new open source AI model leader just days after the last one was claimed. "Our immediate goal is to develop LLMs with robust theorem-proving capabilities, aiding human mathematicians in formal verification projects, such as the recent project of verifying Fermat’s Last Theorem in Lean," Xin said. "A major concern for the future of LLMs is that human-generated data may not meet the growing demand for high-quality data," Xin said.
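As a rough illustration of what a RoPE scaling factor of 4 does, the sketch below assumes linear scaling, where position indices are divided by the factor before the rotary angles are computed. The text does not say which scaling type is meant, so treat the linear variant, the function name `rope_angles`, and the default base of 10000 as assumptions.

```python
import math


def rope_angles(position: int, head_dim: int, base: float = 10000.0,
                scaling_factor: float = 1.0) -> list[float]:
    """Rotary angles for one token position under linear RoPE scaling.

    Hypothetical helper: with linear scaling the position is divided by
    `scaling_factor`, so a factor of 4 stretches the usable context 4x.
    """
    scaled_position = position / scaling_factor
    # One angle per pair of dimensions: theta_i = base^(-2i / head_dim)
    return [scaled_position * base ** (-2.0 * i / head_dim)
            for i in range(head_dim // 2)]


if __name__ == "__main__":
    unscaled = rope_angles(position=1, head_dim=8)
    scaled = rope_angles(position=4, head_dim=8, scaling_factor=4.0)
    # Position 4 with factor 4 rotates exactly like position 1 without scaling.
    print(all(math.isclose(a, b) for a, b in zip(unscaled, scaled)))
```

Under this assumption, running a model without the scaling factor it was trained with feeds it rotation angles it never saw, which is one plausible reason the output degrades.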

K), a lower sequence length may have to be used. Note that a lower sequence length does not limit the sequence length of the quantised model. Note that using Git with HF repos is strongly discouraged. The launch of a new chatbot by Chinese artificial intelligence firm DeepSeek triggered a plunge in US tech stocks as it appeared to perform as well as OpenAI’s ChatGPT and other AI models, but using fewer resources. This includes permission to access and use the source code, as well as design documents, for building applications. How to use the deepseek-coder-instruct to complete the code? Although the deepseek-coder-instruct models are not specifically trained for code completion tasks during supervised fine-tuning (SFT), they retain the capability to perform code completion effectively. 32014, as opposed to its default value of 32021 in the deepseek-coder-instruct configuration. The Chinese AI startup sent shockwaves through the tech world and caused a near-$600 billion plunge in Nvidia's market value. DeepSeek, the AI offshoot of Chinese quantitative hedge fund High-Flyer Capital Management, has officially launched its latest model, DeepSeek-V2.5, an enhanced version that integrates the capabilities of its predecessors, DeepSeek-V2-0628 and DeepSeek-Coder-V2-0724.
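The truncated sentence above gives the values 32014 and 32021 without naming the setting they belong to. Assuming it refers to the `eos_token_id` generation parameter, a sketch of switching between chat and code-completion modes might look like this. The helper name `generation_kwargs` and the mode strings are hypothetical.

```python
CHAT_EOS_TOKEN_ID = 32021        # default quoted for deepseek-coder-instruct
COMPLETION_EOS_TOKEN_ID = 32014  # value quoted for code completion


def generation_kwargs(mode: str, max_new_tokens: int = 128) -> dict:
    """Build keyword arguments for a Hugging Face `model.generate` call.

    Hypothetical helper: assumes the two IDs above are end-of-sequence
    token IDs and that only this setting differs between the two modes.
    """
    if mode == "completion":
        eos_token_id = COMPLETION_EOS_TOKEN_ID
    elif mode == "chat":
        eos_token_id = CHAT_EOS_TOKEN_ID
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return {
        "eos_token_id": eos_token_id,
        "max_new_tokens": max_new_tokens,
        "do_sample": False,  # greedy decoding keeps completions deterministic
    }


if __name__ == "__main__":
    print(generation_kwargs("completion"))
```

With a model and tokenized inputs already loaded through `transformers`, the result would be passed along as `model.generate(**inputs, **generation_kwargs("completion"))`.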
